Even though I mostly sit at work trying to look busy, every so often someone does stumble into my office with a question or a problem, so I’ve got to do something.

Interestingly enough, a lot of problems can be handled by some pretty basic stuff, like reminding people that a .jar/.war file is a zip file and you can look inside to see what’s there or what’s missing, or sending people to read the log files (turns out these buggers actually contain useful information), and so on. So now for today’s lesson: “It’s open source, so the source, you know, is open…”
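A quick aside on the jar trick before moving on: because a .jar or .war is just a zip archive, the JDK's own `java.util.zip` classes can list what is inside. The sketch below is only an illustration; the class name `JarPeek` and the `listEntries` helper are made up for this post, and the archive path is whatever you pass on the command line.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Enumeration;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// Hypothetical helper class, named for this example only.
class JarPeek {

    // Returns the sorted names of all entries in a zip-format archive
    // (.jar, .war, .zip all work, since they share the same format).
    static List<String> listEntries(String archivePath) throws IOException {
        List<String> names = new ArrayList<>();
        try (ZipFile zip = new ZipFile(archivePath)) {
            Enumeration<? extends ZipEntry> entries = zip.entries();
            while (entries.hasMoreElements()) {
                names.add(entries.nextElement().getName());
            }
        }
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java JarPeek.java <path-to-jar-or-war>");
            return;
        }
        // Print one entry name per line so a missing class or resource is easy to spot.
        for (String name : listEntries(args[0])) {
            System.out.println(name);
        }
    }
}
```

Piping the output through a search for the class or resource you expected is usually enough to answer the "is it even in there?" question.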

We use a lot of open source projects at Nice (we’re also, slowly, starting to give something back to the community, but that’s another story). One of these is HBase. One of our devs was working on enabling and testing compression on HBase. Looking at the HBaseAdmin API (actually the column descriptor), he saw that there was an option for setting the compression of a column family and another for setting the compression used during compaction. His question was whether I knew how it behaves when you set one and not the other, and how the two work together.

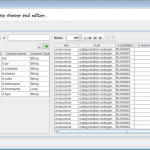

Well, I knew about HBase compression, but I hadn’t heard about compaction compression, and the documentation on it is, well, lacking. Luckily HBase is an open source project, so I took a peek. I started with HFile.java, which reads and writes HBase data to Hadoop. It seems that the writer gets a compression algorithm as a parameter and that the reader gets the compression algorithm from the header. So essentially different HFiles can have different compressions and HBase will not care. We start to see the picture, but to be sure we need to see where the compression is set. So we look in the regionserver’s Store.java file and we see:

Bottom line: by reading through the HBase code I was able to understand exactly how the feature in question behaves, and also to get a better understanding of the internal workings of HBase (HFiles describe their own structure, so different files can have different attributes, like compression).
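To make the "files describe themselves" idea concrete, here is a toy sketch. It is emphatically not the HFile format or any HBase code: the class `SelfDescribingFile`, its `Codec` enum, and the one-string header are all invented for illustration. The only point is the shape of the design: the writer is handed a codec and records it in the file, and the reader takes no codec argument at all. It uses `InputStream.readAllBytes`, so it assumes Java 9 or later.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Toy format for illustration only; this is NOT the HFile layout.
class SelfDescribingFile {

    enum Codec { NONE, GZ }

    // The writer is told which codec to use and records its name in a header.
    static byte[] write(String payload, Codec codec) throws IOException {
        ByteArrayOutputStream file = new ByteArrayOutputStream();
        DataOutputStream header = new DataOutputStream(file);
        header.writeUTF(codec.name());
        header.flush();
        // The body follows the header, compressed or not depending on the codec.
        try (OutputStream body = codec == Codec.GZ ? new GZIPOutputStream(file) : file) {
            body.write(payload.getBytes(StandardCharsets.UTF_8));
        }
        return file.toByteArray();
    }

    // The reader takes no codec argument: it learns the codec from the header.
    static String read(byte[] fileBytes) throws IOException {
        DataInputStream in = new DataInputStream(new ByteArrayInputStream(fileBytes));
        Codec codec = Codec.valueOf(in.readUTF());
        try (InputStream body = codec == Codec.GZ ? new GZIPInputStream(in) : in) {
            return new String(body.readAllBytes(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        // Two "files" written with different codecs, read back by the same code path.
        byte[] plain = write("row-1", Codec.NONE);
        byte[] zipped = write("row-2", Codec.GZ);
        System.out.println(read(plain));
        System.out.println(read(zipped));
    }
}
```

With a layout like this, files written at different times with different settings can sit side by side and the reading side never needs to be told which is which.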

Another example of how reading code can help is Yammer’s monitoring library, metrics. While building the monitoring solution for our platform we also collect JMX counters (like everybody else, I guess :) ). So I stumbled upon metrics, and the manual did a good job of showing the different features and explaining why this is an interesting library. I asked one of our architects to do a POC and see whether it is a good fit. He tried, but it turned out to be rather hard to understand, from the documentation alone, how to put everything together and actually use it. Luckily the metrics code has unit tests (not for all of it, by the way, which is a shame, but for enough of it), e.g. the following (taken from here), which shows how to instrument a Jersey service:

Again, we see that having the code available is a great benefit. You don’t have to rely on the documentation being complete (something we all do so well, but those other people writing code don’t, so, you know...) or hope for a good Samaritan to help you on Stack Overflow or some other forum. And that’s just from reading the code… imagine what you could do if you could actually offer fixes to problems you encounter. But, oh wait, you can…

OK, I think I’ve done enough for today. Got to get back to trying to look busy.

Illustration licensed under Creative Commons Attribution 2.5 by opensource.org
