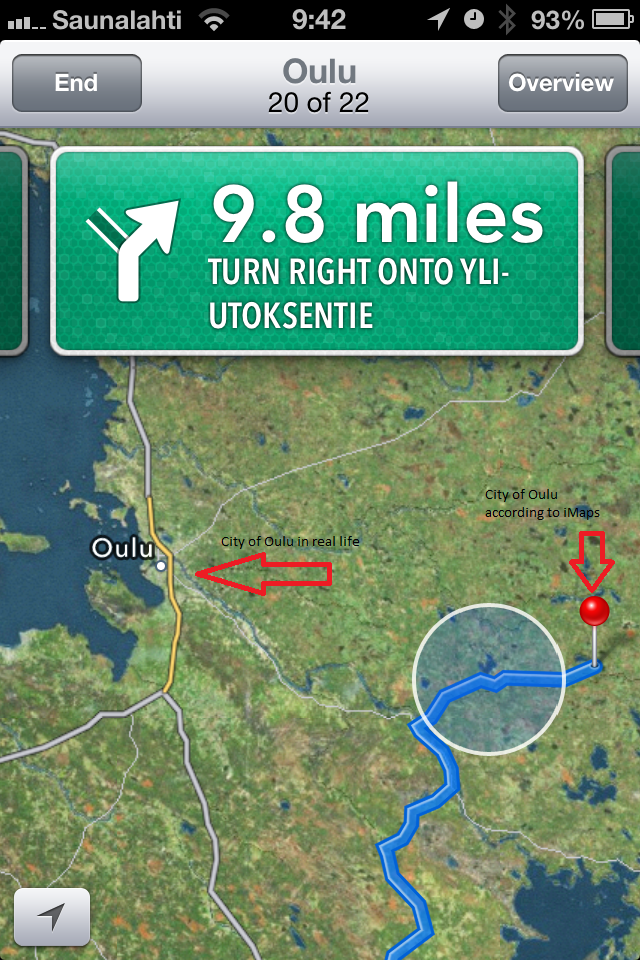

By now you’ve probably heard something about Apple’s new iOS 6 Maps app. In case you’ve been living under a rock, it turns out that the shiny new application that replaces Google Maps in the new iOS release produces a lot of inaccuracies, mangled graphics, navigation errors and whatnot (just like the image you see on the left; for more examples you can see this site). Kidding (or gloating) aside, this debacle carries a few important lessons that anyone building a big data project should keep in mind.

Apple took data from various sources such as Waze, TomTom, Yelp and others to build their database, thinking that it is all just geographical data using the same coordinate system, so everything should be just fine. Well, it doesn’t work like that. Our first and probably most important lesson is that it isn’t just about getting all the data there. When you build a big data project and amass data from every possible source, don’t think that you’ll automagically achieve analytics nirvana. If you don’t take care to check the data, understand the connections between the different entities and really understand what you have there, you’ll just end up with a big pile of data.
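To make "check the data" a bit more concrete, here is a minimal sketch of one such check: comparing where two sources place the same entity and flagging the ones that disagree. The place ids, coordinates and the 0.5 km threshold are hypothetical, made up for illustration, and the sketch assumes both sources already use the same datum.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * r * math.asin(math.sqrt(a))

def find_conflicts(source_a, source_b, max_km=0.5):
    """Return ids of places whose two reported positions differ by more than max_km."""
    conflicts = []
    # Only places that appear in both sources can be cross-checked.
    for place_id in sorted(source_a.keys() & source_b.keys()):
        lat_a, lon_a = source_a[place_id]
        lat_b, lon_b = source_b[place_id]
        if haversine_km(lat_a, lon_a, lat_b, lon_b) > max_km:
            conflicts.append(place_id)
    return conflicts

# Hypothetical records: id -> (latitude, longitude)
source_a = {"station-1": (32.0800, 34.7800), "mall-7": (32.1000, 34.8000)}
source_b = {"station-1": (32.0801, 34.7801), "mall-7": (32.2000, 34.8000)}

conflicts = find_conflicts(source_a, source_b)
print(conflicts)
```

A check like this doesn’t tell you which source is right, only where to look.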

A related lesson we should take away from this is that it isn’t just one pile of data. In GIS or mapping applications it seems it is all just “geographic data”, but in reality there are different types of data we need to take care of, such as raster maps (e.g. satellite images), vector maps (e.g. roads, rivers), digital terrain models (3D models), points of interest (e.g. gas stations, malls), etc. Each can come in a different scale and datum, and each needs to be handled differently. The same is true for your project: it has its own domain and its own entities. Each entity needs to be handled differently, and they all need to work together. I personally think a good way to handle this is to apply SOA thinking to big data (if you’re in Israel, I’ll be speaking about just that next month), but regardless of how you handle it, remember that you should.
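As a rough illustration of treating the pile as several distinct kinds of data, the sketch below groups incoming layers by kind and datum and reports when more than one datum is present, so they can be reprojected before anything is merged. The layer records and their field names (`name`, `kind`, `datum`) are hypothetical.

```python
from collections import defaultdict

def group_by_kind_and_datum(layers):
    """Map (kind, datum) -> list of layer names, so each group can be handled on its own."""
    groups = defaultdict(list)
    for layer in layers:
        groups[(layer["kind"], layer["datum"])].append(layer["name"])
    return dict(groups)

def mixed_datums(layers):
    """Return the sorted datums if more than one is present, else an empty list."""
    datums = sorted({layer["datum"] for layer in layers})
    return datums if len(datums) > 1 else []

# Hypothetical layer descriptions
layers = [
    {"name": "roads", "kind": "vector", "datum": "WGS84"},
    {"name": "satellite", "kind": "raster", "datum": "WGS84"},
    {"name": "gas-stations", "kind": "poi", "datum": "NAD27"},
]

groups = group_by_kind_and_datum(layers)
problem = mixed_datums(layers)
if problem:
    print("Reproject before merging; datums found:", problem)
```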

Another thing that is apparent is that Apple relied mainly on algorithms to stitch together all the data from the many sources they used. The takeaway here is that algorithms are cool and everything, but humans should oversee the process, or as Ronald Reagan put it, “trust but verify”. We all build our predictive models, recommendation engines and nifty clustering algorithms, and we should really make sure the results mesh well together. For instance, in our system we work on multi-channel interactions (of people with organisations, such as call centers). One of the channels we have is voice calls, another is feedback on interactions. If we just cluster call transcripts together with the given feedback (to get at the interactions’ root causes) without understanding the meaning of these different channels, the outcome looks awesome and everything, but it is meaningless. Again, the algorithms are important, but we need to look at what they’re producing and understand the data they are working on.

Another issue, which is more of a guess based on the outcome (Update: it seems it isn’t just a guess), is that you need to hire the right people for the job. I’d venture to say that Apple underestimated the complexities of producing a global maps service and didn’t hire enough GIS experts to make sure this would work well at the needed scale (scale of data, not scale of users). You need to understand the nature of your problem and get qualified experts on that subject. Are you mainly about text analytics? Is your problem mainly data refining and quality? Is it about statistical models? Something else? Make sure you understand what your problems are, get people who can solve them, and make sure you have enough of them to go around.

The last lesson learned is not specific to big data projects and is probably true for any software project: don’t underestimate QA and testing. It is obvious that Apple didn’t do enough of that and that the real testers are the iOS users who are taking the maps out for a ride (pardon the pun). Testing, quality assurance and vetting data quality are all important. Automated testing is really important but, as mentioned above, don’t forget to put some humans in the loop as well.
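One way to combine the two, sketched below under made-up assumptions, is to run automated checks on every record and then pull a random sample of the records that passed for a human to look at. The record fields, the specific checks and the sample size are all hypothetical.

```python
import random

def automated_check(record):
    """Return a list of problems found in one point-of-interest record."""
    problems = []
    if not record.get("name"):
        problems.append("missing name")
    lat, lon = record.get("lat"), record.get("lon")
    if lat is None or not -90 <= lat <= 90:
        problems.append("bad latitude")
    if lon is None or not -180 <= lon <= 180:
        problems.append("bad longitude")
    return problems

def split_for_review(records, sample_size, seed=0):
    """Return (records failing the automated checks, random sample of passing records)."""
    failed = [r for r in records if automated_check(r)]
    passed = [r for r in records if not automated_check(r)]
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    sample = rng.sample(passed, min(sample_size, len(passed)))
    return failed, sample

# Hypothetical records
records = [
    {"name": "Gas station", "lat": 32.08, "lon": 34.78},
    {"name": "Mall", "lat": 123.0, "lon": 34.80},   # latitude out of range
    {"name": "Museum", "lat": 32.10, "lon": 34.81},
]

failed, human_sample = split_for_review(records, sample_size=1)
print(len(failed), "failed automated checks;", len(human_sample), "sent to a human")
```

The automated checks catch what you thought to test for; the human sample is there for everything you didn’t.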